S2-Transformer Code Walkthrough

2022-08-18


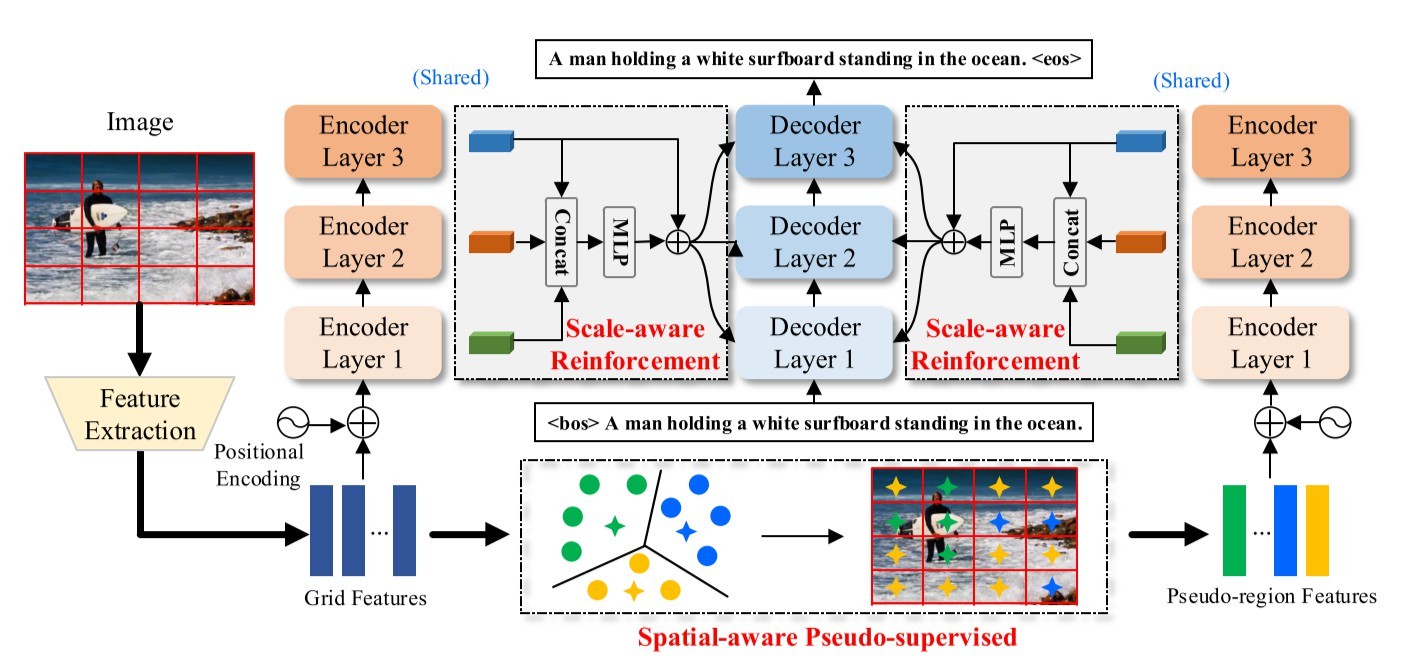

A walkthrough of how the $S^2$-Transformer code runs (based on the $M^2$ Transformer code)

I changed the code to run on a single GPU and ignore DDP training.

TODO

- [✔] 2022-08-18

- [✔] Start

- [ ] 2022-09-12

Running main

`train_transformer.py` defines a number of optional arguments, which are parsed into `args`:

```python
import argparse

import torch

device = torch.device('cuda')

parser = argparse.ArgumentParser(description="Transformer")
parser.add_argument('--exp_name', type=str, default='s2')
...
args = parser.parse_args()
print(args)
```

The printed arguments:

```
annotation_folder='Data/annotations'
batch_size=50
dir_to_save_model='checkpoint/'
exp_name='s2'
features_path='Data/X101_grid_feats_coco_trainval.hdf5'
head=8
logs_folder='tensorboard_logs'
m=40  # not used
num_clusters=5
refine_epoch_rl=28
resume_best=False
resume_last=False
rl_base_lr=5e-06
text2text=0  # not used
warmup=10000
workers=0
xe_base_lr=0.0001
xe_least=15
xe_most=20
```

Train function: train(args)

First, check whether the `dir_to_save_model` and `tensorboard_logs` directories exist, and create them if they do not:

```python
import os

# preparation
if not os.path.exists(args.dir_to_save_model):
    os.makedirs(args.dir_to_save_model)
if not os.path.exists(args.logs_folder):
    os.makedirs(args.logs_folder)
```

TensorBoard, used to visualize the training process:

```python
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter(log_dir=os.path.join(args.logs_folder, args.exp_name))
```

`image_field` is the class that represents the image features:

```python
# Pipeline for image regions
image_field = ImageDetectionsField(detections_path=args.features_path, max_detections=49, load_in_tmp=False)
```

`text_field` is the class that represents the text annotations:

```python
# Pipeline for text
text_field = TextField(init_token='<bos>', eos_token='<eos>', lower=True, tokenize='spacy',
                       remove_punctuation=True, nopoints=False)
```

The COCO dataset:

```python
# Create the dataset
dataset = COCO(image_field, text_field, 'coco/images/', args.annotation_folder, args.annotation_folder)
train_dataset, val_dataset, test_dataset = dataset.splits
```

`vocab.pkl` is the vocabulary. It contains $10201$ tokens (words or strings).

- If the file exists, it is loaded directly.
- If it does not exist, a new vocabulary is built that keeps only the words appearing at least $5$ times (`min_freq=5`).
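The `min_freq` rule can be sketched in plain Python. This sketch makes two assumptions. First, it follows torchtext-style semantics, where a word is kept when its count is at least `min_freq`. Second, the special tokens `<unk>`, `<pad>`, `<bos>`, `<eos>` come first, which would give `<pad>` the index $1$ used by the decoder. `build_vocab` is a hypothetical stand-in, not `TextField.build_vocab` itself.

```python
from collections import Counter

SPECIALS = ['<unk>', '<pad>', '<bos>', '<eos>']  # assumed order of the special tokens


def build_vocab(tokenized_captions, min_freq=5):
    """Hypothetical stand-in for text_field.build_vocab: count every word and keep
    those that occur at least min_freq times, most frequent first."""
    counter = Counter(tok for caption in tokenized_captions for tok in caption)
    itos = list(SPECIALS)
    # Sort by descending frequency, breaking ties alphabetically
    for word, freq in sorted(counter.items(), key=lambda kv: (-kv[1], kv[0])):
        if freq < min_freq:
            break  # every remaining word is rarer
        itos.append(word)
    stoi = {w: i for i, w in enumerate(itos)}
    return itos, stoi


captions = [['a', 'dog', 'runs']] * 5 + [['a', 'cat']] * 4
itos, stoi = build_vocab(captions, min_freq=5)
print(itos)  # ['<unk>', '<pad>', '<bos>', '<eos>', 'a', 'dog', 'runs']
```

Here `cat` occurs only 4 times, so it is left out and would map to `<unk>`.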

```python
import pickle

if not os.path.isfile('vocab.pkl'):
    print("Building vocabulary")
    text_field.build_vocab(train_dataset, val_dataset, min_freq=5)
    pickle.dump(text_field.vocab, open('vocab.pkl', 'wb'))
else:
    print('Loading from vocabulary')
    text_field.vocab = pickle.load(open('vocab.pkl', 'rb'))
```

Modeling

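Create the model.

Custom parameters of `encoder`:
- `EncoderLayer` is stacked $3$ times
- the padding index is $0$ (positions whose features sum to $0$ are treated as padding)
- attention module: `attention_module` is the original `ScaledDotProductAttention`. The `m=args.m=40` in `attention_module_kwargs` is not used in this paper; it is a hyperparameter introduced in $M^2$

Parameters of `decoder`:
- the size of the vocabulary after filtering: $10201$
- the maximum sentence length: $54$
- `DecoderLayer` is stacked $3$ times
- the index of the `<pad>` token: $1$

Parameters of `Transformer`:
- the index of `<bos>`
- the `encoder` defined above
- the `decoder` defined above
- a hyperparameter, the number of clusters: $5$ in the paper
- the vocabulary size
- `max_len`: $54$
- the index of `<pad>`
- `text_dimension`, the projection dimension: $512$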

```python
# Model and dataloaders
encoder = TransformerEncoder(3, 0, attention_module=ScaledDotProductAttention,
                             attention_module_kwargs={'m': args.m})
decoder = TransformerDecoderLayer(len(text_field.vocab), 54, 3, text_field.vocab.stoi['<pad>'])
model = Transformer(text_field.vocab.stoi['<bos>'], encoder, decoder, args.num_clusters,
                    len(text_field.vocab), 54, text_field.vocab.stoi['<pad>'], 512).to(device)
```

Dictionary-style datasets, used in the SCST training stage:

```python
dict_dataset_train = train_dataset.image_dictionary({'image': image_field, 'text': RawField(), 'add_text': text_field})
dict_dataset_val = val_dataset.image_dictionary({'image': image_field, 'text': RawField(), 'add_text': text_field})
dict_dataset_test = test_dataset.image_dictionary({'image': image_field, 'text': RawField(), 'add_text': text_field})
```

`ref_caps_train` is a list that stores the references used during training (also called labels). These are all the sentences (`str`) that describe the images:

```python
ref_caps_train = list(train_dataset.text())
```

`ref_caps_train` is then processed by `class PTBTokenizer(object)` in `tokenizer.py`, which calls the Stanford CoreNLP Java jar. It returns a dict containing all the captions, where each value is a list of strings. Passing this dict to `class Cider()` produces a `cider_train` object, which is used to optimize the CIDEr metric during SCST:

```python
cider_train = Cider(PTBTokenizer.tokenize(ref_caps_train))
```

The learning-rate schedules and optimizers of the two stages:

```python
from torch.optim import Adam
from torch.optim.lr_scheduler import LambdaLR


def lambda_lr(s):
    print("s:", s)
    if s <= 3:
        lr = args.xe_base_lr * s / 4
    elif s <= 10:
        lr = args.xe_base_lr
    elif s <= 12:
        lr = args.xe_base_lr * 0.2
    else:
        lr = args.xe_base_lr * 0.2 * 0.2
    return lr


def lambda_lr_rl(s):
    refine_epoch = args.refine_epoch_rl
    print("rl_s:", s)
    if s <= refine_epoch:
        lr = args.rl_base_lr
    elif s <= refine_epoch + 3:
        lr = args.rl_base_lr * 0.2
    elif s <= refine_epoch + 6:
        lr = args.rl_base_lr * 0.2 * 0.2
    else:
        lr = args.rl_base_lr * 0.2 * 0.2 * 0.2
    return lr


optim = Adam(model.parameters(), lr=1, betas=(0.9, 0.98))
scheduler = LambdaLR(optim, lambda_lr)

optim_rl = Adam(model.parameters(), lr=1, betas=(0.9, 0.98))
scheduler_rl = LambdaLR(optim_rl, lambda_lr_rl)
```
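Both optimizers are created with `lr=1`, and `LambdaLR` sets each group's learning rate to `base_lr * lambda(step)`. As a result, the effective learning rate is simply the value the lambda returns. The sketch below reproduces the two schedules in plain Python. It uses hypothetical constants in place of `args`, taking the values printed by `print(args)` above.

```python
# Hypothetical stand-ins for args.xe_base_lr, args.rl_base_lr and args.refine_epoch_rl
XE_BASE_LR = 1e-4
RL_BASE_LR = 5e-6
REFINE_EPOCH_RL = 28


def lambda_lr(s):
    """XE stage: linear warm-up up to step 3, then decay by 0.2 twice."""
    if s <= 3:
        return XE_BASE_LR * s / 4
    elif s <= 10:
        return XE_BASE_LR
    elif s <= 12:
        return XE_BASE_LR * 0.2
    return XE_BASE_LR * 0.2 * 0.2


def lambda_lr_rl(s):
    """SCST stage: constant until refine_epoch, then decay by 0.2 every 3 steps (3 times)."""
    if s <= REFINE_EPOCH_RL:
        return RL_BASE_LR
    elif s <= REFINE_EPOCH_RL + 3:
        return RL_BASE_LR * 0.2
    elif s <= REFINE_EPOCH_RL + 6:
        return RL_BASE_LR * 0.2 * 0.2
    return RL_BASE_LR * 0.2 * 0.2 * 0.2


BASE_LR = 1.0  # the lr passed to Adam
for step in (0, 1, 3, 4, 11, 13):
    print('xe', step, BASE_LR * lambda_lr(step))
for step in (28, 29, 35):
    print('rl', step, BASE_LR * lambda_lr_rl(step))
```

PyTorch's `LambdaLR` evaluates the lambda once at construction (step 0, which gives a learning rate of 0 for `lambda_lr`). In addition, `train_xe` calls `scheduler.step()` at the start of every epoch. Epoch `e` of a fresh run therefore appears to train with `lambda_lr(e + 1)`.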

```python
from torch.nn import MSELoss, NLLLoss

# The <pad> token does not take part in the loss
loss_fn = NLLLoss(ignore_index=text_field.vocab.stoi['<pad>'])
# The second loss
loss_align = MSELoss()
loss = (loss_fn, loss_align)

use_rl = False
best_cider = .0
best_test_cider = 0.
patience = 0
start_epoch = 0

if args.resume_last or args.resume_best:
    if args.resume_last:
        fname = os.path.join(args.dir_to_save_model, '%s_last.pth' % args.exp_name)
    else:
        fname = os.path.join(args.dir_to_save_model, '%s_best.pth' % args.exp_name)
    # fname = 'checkpoint/s2_last.pth'
    if os.path.exists(fname):
        print("load model {}".format(fname))
        data = torch.load(fname)
        torch.set_rng_state(data['torch_rng_state'])
        torch.cuda.set_rng_state(data['cuda_rng_state'])
        np.random.set_state(data['numpy_rng_state'])
        random.setstate(data['random_rng_state'])
        model.load_state_dict(data['state_dict'], strict=False)
        """
        optim.load_state_dict(data['optimizer'])
        scheduler.load_state_dict(data['scheduler'])
        """
        start_epoch = data['epoch'] + 1
        best_cider = data['best_cider']
        best_test_cider = data['best_test_cider']
        patience = data['patience']
        use_rl = data['use_rl']
        # The checkpoint stores optim_rl / scheduler_rl state when use_rl is True
        # (see the torch.save call at the end of the epoch), so restore the matching pair
        if not use_rl:
            optim.load_state_dict(data['optimizer'])
            scheduler.load_state_dict(data['scheduler'])
        else:
            optim_rl.load_state_dict(data['optimizer'])
            scheduler_rl.load_state_dict(data['scheduler'])
        print('Resuming from epoch %d, validation loss %f, best cider %f, and best_test_cider %f' % (
            data['epoch'], data['val_loss'], data['best_cider'], data['best_test_cider']))
        print('patience:', data['patience'])
    else:
        print("no load model")
```

Start training

```python
print("Training starts")
for e in range(start_epoch, start_epoch + 100):
    ...
```

The loop body is shown below piece by piece, without the loop's indentation. First come the `dataloader`s used in the XE stage:

```python
# persistent_workers=True requires num_workers > 0; with workers=0 (the value printed
# above) the DataLoader raises a ValueError, so it is only enabled when workers > 0
dataloader_train = DataLoader(train_dataset, batch_size=args.batch_size, pin_memory=True, drop_last=False,
                              num_workers=args.workers, shuffle=True, persistent_workers=args.workers > 0)
dataloader_val = DataLoader(val_dataset, batch_size=args.batch_size, shuffle=False, num_workers=args.workers)
```

Next are the `dict_dataloader`s used in the SCST stage:

```python
dict_dataloader_train = DataLoader(dict_dataset_train, batch_size=args.batch_size // 5, pin_memory=True,
                                   drop_last=False, num_workers=args.workers,
                                   persistent_workers=args.workers > 0)
dict_dataloader_val = DataLoader(dict_dataset_val, batch_size=args.batch_size // 5)
dict_dataloader_test = DataLoader(dict_dataset_test, batch_size=args.batch_size // 5)
```

The two stages use different training functions and loss functions, and the logs of every epoch are recorded:

```python
# Arguments match the train_xe / train_scst signatures defined below
if not use_rl:
    train_loss = train_xe(model, dataloader_train, optim, text_field, scheduler, loss_fn, e)
    writer.add_scalar('data/train_loss', train_loss, e)
else:
    train_loss, reward, reward_baseline = train_scst(model, dict_dataloader_train, optim_rl, cider_train,
                                                     text_field, scheduler_rl, e)
    writer.add_scalar('data/train_loss', train_loss, e)
    writer.add_scalar('data/reward', reward, e)
    writer.add_scalar('data/reward_baseline', reward_baseline, e)
```

`model`, `dataloader`, `optim` and the other objects are passed to the training function. The function returns the training loss after one epoch of updates.

First, iterating over `dataloader` yields `it` (the iteration index), `detections` (the key) and `captions` (the value). `detections` has shape `(bs, max_detections, visual_dim)`.

`detections` is fed to `model` as `images` and `captions` as `seq`, and the gradients are reset to $0$. `captions` has shape `(batch_size, seq_len)`. Slicing from the second token, `captions[:, 1:]`, gives the ground truth `captions_gt` with shape `(batch_size, seq_len-1)`. `.contiguous()` makes sure the slice occupies contiguous memory, copying it to a new block if it does not. The one-token offset is intentional: within a sequence, each token is used to predict the next one.

`out` is the model output, with shape `(batch_size, seq_len, vocab_len)`. Its last position is dropped so that it lines up with `captions_gt` for the loss, which gives `(batch_size, seq_len-1, vocab_len)`.

`out` is then reshaped to `(batch_size * (seq_len-1), vocab_len)` and `captions_gt` to `(batch_size * (seq_len-1))`. Both go into the loss function, here `NLLLoss()`, and the loss is back-propagated to update the parameters:

- `out = (bs * (seq_len-1), vocab_len)`
- `captions_gt = (bs * (seq_len-1))`
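The shift-by-one alignment and the `ignore_index` behaviour can be checked on one toy caption in plain Python. The token ids (`<pad>`=1, `<bos>`=2, `<eos>`=3) and the decoder scores are made up for illustration. `nll_loss` is a hypothetical helper that mimics `NLLLoss(ignore_index=...)` with its default mean reduction, which averages only over targets that are not ignored.

```python
import math

PAD, BOS, EOS = 1, 2, 3  # hypothetical ids, consistent with <pad>=1 above


def log_softmax(scores):
    """Numerically stable log-softmax of one row of scores."""
    m = max(scores)
    lse = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - lse for s in scores]


def nll_loss(log_probs, targets, ignore_index=PAD):
    """Like NLLLoss(ignore_index=...) with reduction='mean': average -log p(target)
    over the positions whose target is not ignore_index."""
    picked = [-row[t] for row, t in zip(log_probs, targets) if t != ignore_index]
    return sum(picked) / len(picked)


captions = [BOS, 5, 6, EOS, PAD]  # one caption, seq_len = 5
vocab_len = 8
# Made-up decoder scores: one row of vocab_len scores per input position
scores = [[float((i * 3 + v) % 5) for v in range(vocab_len)] for i in range(len(captions))]
out = [log_softmax(row) for row in scores]

captions_gt = captions[1:]  # like captions[:, 1:]: the token each position should predict
out = out[:-1]              # like out[:, :-1]: drop the last position
loss = nll_loss(out, captions_gt)
print(len(out), len(captions_gt), loss)
```

The last target is `<pad>`, so only the first three positions contribute to the average.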

`captions_gt` serves as the labels and is a 1-D tensor. `out` is the output of the last decoder layer after `log_softmax`: softmax turns the scores into positive values in $(0, 1)$, and the log turns these into negative values in $(-\infty, 0)$. `pbar` updates a progress bar after every iteration.

```python
from tqdm import tqdm


def train_xe(model, dataloader, optim, text_field, scheduler, loss_fn, e):
    # Training with cross-entropy
    model.train()
    scheduler.step()
    # show learning rate
    print('lr = ', optim.state_dict()['param_groups'][0]['lr'])
    running_loss = .0
    with tqdm(desc='Epoch %d - train' % e, unit='it', total=len(dataloader)) as pbar:
        for it, (detections, captions) in enumerate(dataloader):
            detections, captions = detections.to(device), captions.to(device)
            out = model(mode='xe', images=detections, seq=captions)
            optim.zero_grad()
            captions_gt = captions[:, 1:].contiguous()
            out = out[:, :-1].contiguous()
            loss = loss_fn(out.view(-1, len(text_field.vocab)), captions_gt.view(-1))
            loss.backward()
            optim.step()
            this_loss = loss.item()
            running_loss += this_loss
            pbar.set_postfix(loss=running_loss / (it + 1))
            pbar.update()
    loss = running_loss / len(dataloader)
    return loss
```

XE training stage

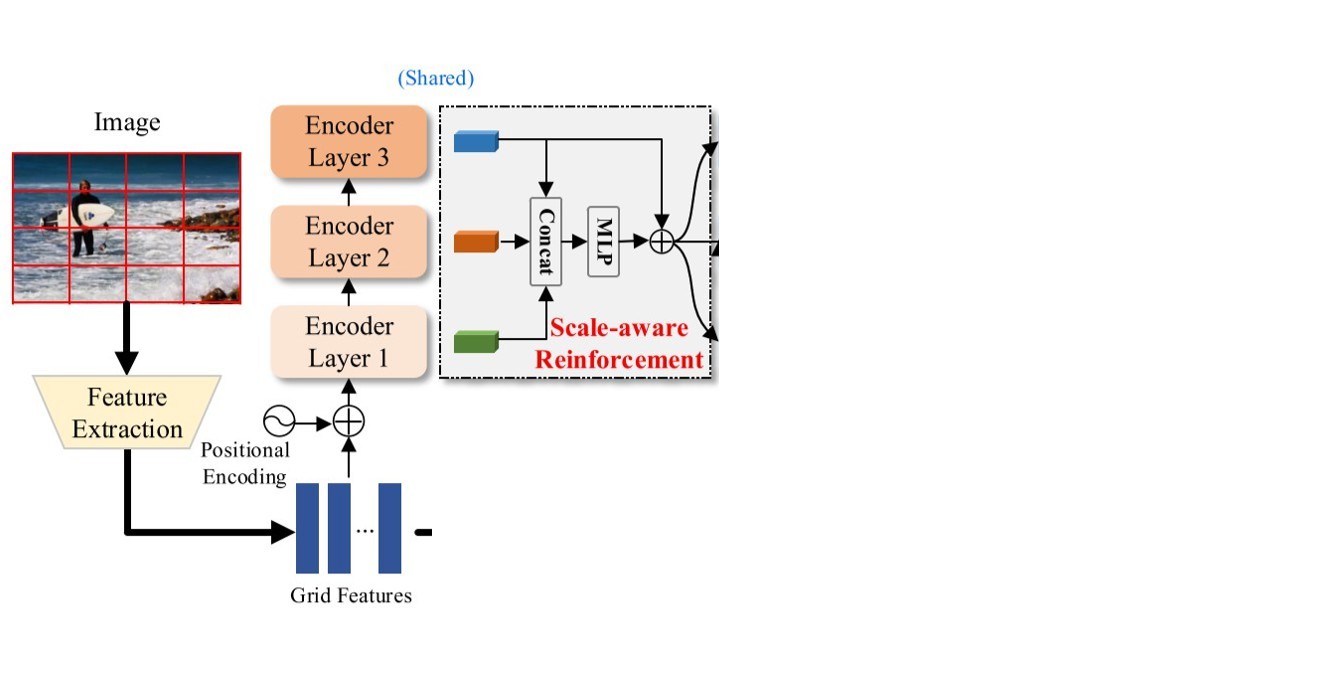

In XE (cross-entropy) training, `images` are fed to the encoder (`TransformerEncoder`). The constructor first calls `super().__init__` and initializes attributes such as `self.d_model` and `self.SR`, and then `forward()` runs. The steps of this stage can be divided into three parts:

- encoding the grid features
- encoding the enhanced grid features (the pseudo-region features)
- decoding the combined features

```python
if mode == 'xe':
    # images = (batch_size, max_detections, dim_visual)
    bs, _, vis_dim = images.size()
    # Grid feature: encode the grid features
    grid_enc_output, grid_mask_enc = self.encoder(images)
    # Pseudo-region feature: encode the enhanced grid features
    # (N, num_clusters*2048) -> (N, num_clusters, 2048)
    pseudo_region = self.SP(images).view(bs, -1, vis_dim)
    pseudo_region_enc_output, pseudo_region_mask_enc = self.encoder(pseudo_region)
    output = torch.cat([grid_enc_output, pseudo_region_enc_output], dim=1)
    mask = torch.cat([grid_mask_enc, pseudo_region_mask_enc], dim=-1)
    # Decode the combined features
    dec_output = self.decoder(seq, output, mask)
    return dec_output
```

Encoding the grid features

`TransformerEncoder.forward()` processes `input` (that is, `images`). It builds a mask and applies a fully connected layer, ReLU, dropout and LayerNorm. It then calls the parent method `MultiLevelEncoder.forward()` through `super().forward()`:

```python
def forward(self, input, attention_weights=None):
    mask = (torch.sum(input, dim=-1) == 0).unsqueeze(-1)
    out = F.relu(self.fc(input))
    out = self.dropout(out)
    out = self.layer_norm(out)
    out = out.masked_fill(mask, 0)
    # out (bs, max_detections, d_model)
    return super(TransformerEncoder, self).forward(out, attention_weights=attention_weights)
```

`MultiLevelEncoder.forward()` computes `attention_mask` and feeds it to the Scale-aware Reinforcement (SR) module:

```python
def forward(self, input, attention_weights=None):
    # input = (bs, max_detections, d_model)
    # attention_mask = (bs, 1, 1, max_detections)
    attention_mask = (torch.sum(input, -1) == self.padding_idx).unsqueeze(1).unsqueeze(1)
    out = self.SR(input, self.layers, attention_mask, attention_weights)
    return out, attention_mask
```

The SR module's `forward()` uses the attention layers to extract features of the input `x`, capturing their semantic information, and encodes them:

```python
def forward(self, x, layers, attention_mask=None, attention_weights=None):
    out = x
    outs = []
    for l in layers:
        out = l(out, out, out, attention_mask, attention_weights)
        outs.append(out)
    outs = self.MLP(torch.cat(outs, -1))
    out = 0.2 * outs + out
    return out
```

`out`, `attention_mask` and the other inputs are passed through the encoder layers. There are $3$ of them, and each has the same input and output shape. This enters `EncoderLayer.forward()`, which, following the paper *Attention Is All You Need*, implements the forward pass of a Transformer encoder layer:

```python
def forward(self, queries, keys, values, attention_mask=None, attention_weights=None):
    att = self.mhatt(queries, keys, values, attention_mask, attention_weights)
    att = self.lnorm(queries + self.dropout(att))
    ff = self.pwff(att)
    return ff
```

Each layer in `layers` is structured as follows:

```
(0): EncoderLayer(
  (mhatt): MultiHeadAttention(
    (attention): ScaledDotProductAttention(
      (fc_q): Linear(in_features=512, out_features=512, bias=True)
      (fc_k): Linear(in_features=512, out_features=512, bias=True)
      (fc_v): Linear(in_features=512, out_features=512, bias=True)
      (fc_o): Linear(in_features=512, out_features=512, bias=True)
      (dropout): Dropout(p=0.1, inplace=False)
    )
    (dropout): Dropout(p=0.1, inplace=False)
    (layer_norm): LayerNorm((512,), eps=1e-05, elementwise_affine=True)
  )
  (dropout): Dropout(p=0.1, inplace=False)
  (lnorm): LayerNorm((512,), eps=1e-05, elementwise_affine=True)
  (pwff): PositionWiseFeedForward(
    (fc1): Linear(in_features=512, out_features=2048, bias=True)
    (fc2): Linear(in_features=2048, out_features=512, bias=True)
    (dropout): Dropout(p=0.1, inplace=False)
    (dropout_2): Dropout(p=0.1, inplace=False)
    (layer_norm): LayerNorm((512,), eps=1e-05, elementwise_affine=True)
  )
)
```

The outputs of all the layers are concatenated, and an MLP weighs the contribution of each layer's features to produce `outs`. `outs` carries lower-level semantic information. It is scaled by a weight of $0.2$ and added to the last layer's output, which gives the enhanced grid encoding.

- Outputs: `out = (bs, max_detections, d_model)`, `attention_mask = (bs, 1, 1, max_detections)`

`self.encoder` returns `out` and `attention_mask`, which are named `grid_enc_output` and `grid_mask_enc`.
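The fusion step of the SR module can be sketched for a single position in plain Python. The stacked attention layers, which in the real code mix information across positions, are replaced by hypothetical per-vector functions. `mlp` is a hypothetical stand-in for `self.MLP`. Only the pattern follows the code above: keep every layer's output, concatenate, project back to `d_model`, then add `0.2 *` the projection to the last output.

```python
def sr_fuse(x, layers, mlp, alpha=0.2):
    """Per-position sketch of SR fusion: run the stacked layers, keep each output,
    concatenate them feature-wise, project with `mlp`, add alpha * projection."""
    out = x
    outs = []
    for layer in layers:
        out = layer(out)
        outs.append(out)
    concat = [v for o in outs for v in o]  # like torch.cat(outs, -1): n_layers * d_model
    fused = mlp(concat)                    # like self.MLP: back to d_model
    return [alpha * f + o for f, o in zip(fused, out)]


d_model = 2


def add_one(v):
    """Hypothetical stand-in for one encoder layer."""
    return [a + 1.0 for a in v]


layers = [add_one, add_one, add_one]


def mlp(c):
    """Hypothetical stand-in for self.MLP: average the layer outputs feature by feature."""
    return [sum(c[i::d_model]) / len(layers) for i in range(d_model)]


fused_out = sr_fuse([0.0, 1.0], layers, mlp)
print(fused_out)
```

The layer outputs are `[1, 2]`, `[2, 3]` and `[3, 4]`. Their average `[2, 3]` is scaled by 0.2 and added to the last output `[3, 4]`.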

Encoding the enhanced grid features

Next, the original `images` are fed as grid features into the Spatial-aware Pseudo-supervised (SP) module. The module clusters them into the Pseudo-region Features proposed by the authors.

`SP.forward()` first reads the batch size and `visual_dim` (that is, `C_in`) from the grid features. The grid features `grid` originally have shape `(batch_size, max_detection_region=49, visual_dim)` and are reshaped to `(batch_size, visual_dim, 7, 7)`. The module then proceeds as follows:

- Apply $\ell_2$ normalization to `grid`, then a convolution: `Conv2d`, `(bs, C_in=visual_dim=2048, h=7, w=7) -> (bs, C_out=num_clusters=5, h=7, w=7)`
- Reshape: `(bs, num_clusters=5, h=7, w=7) -> (bs, num_clusters, 49)`
- Apply `Softmax` over the cluster dimension to get `soft_assign`
- Let `x_flatten = (bs, visual_dim, -1) = (bs, visual_dim, 49)`, then `expand(num_clusters, -1, -1, -1)` adds a leading dimension of size `num_clusters`: `(bs, visual_dim, 49) -> (num_clusters, bs, visual_dim, 49)`
- `permute(1, 0, 2, 3)` gives `x_flatten`: `(num_clusters, bs, visual_dim, 49) -> (bs, num_clusters, visual_dim, 49)`
- `centroids = (num_clusters, visual_dim)` is likewise expanded with `expand(49, -1, -1)`: `(num_clusters, visual_dim) -> (49, num_clusters, visual_dim)`
- `permute(1, 2, 0)`: `(49, num_clusters, visual_dim) -> (num_clusters, visual_dim, 49)`
- `unsqueeze(0)` gives `centroids`: `(num_clusters, visual_dim, 49) -> (1, num_clusters, visual_dim, 49)`
- Subtracting the two gives `residual = (bs, num_clusters, visual_dim, 49)`. `residual` is multiplied by `soft_assign.unsqueeze(2)` to get the new `residual`, which is then summed over the last dimension to get `p = (bs, num_clusters, visual_dim)`
- Apply intra-normalization (normalization within each cluster) and reshape with `view(bs, -1)`: `(bs, num_clusters, visual_dim) -> (bs, num_clusters * visual_dim)`, followed by a final $\ell_2$ normalization

Pseudo Region Features

```python
def forward(self, grids):
    N, C = grids.shape[0], grids.shape[-1]
    grids = grids.view(N, 7, 7, -1).permute(0, 3, 1, 2).contiguous()
    if self.normalize_input:
        # across descriptor dim
        grids = F.normalize(grids, p=2, dim=1)
    soft_assign = self.conv(grids).view(N, self.num_regions, -1)
    soft_assign = F.softmax(soft_assign, dim=1)
    x_flatten = grids.view(N, C, -1)
    residual = x_flatten.expand(self.num_regions, -1, -1, -1).permute(1, 0, 2, 3).contiguous() - \
        self.centroids.expand(x_flatten.size(-1), -1, -1).permute(1, 2, 0).contiguous().unsqueeze(0)
    residual *= soft_assign.unsqueeze(2)
    p = residual.sum(dim=-1)
    # intra-normalization
    p = F.normalize(p, p=2, dim=2)
    p = p.view(grids.size(0), -1)
    # L2 normalize
    p = F.normalize(p, p=2, dim=1)
    return p
```

The Pseudo Region Features are fed into the encoder in the same way as the original grid features, producing `pseudo_region_enc_output` and `pseudo_region_mask_enc`:

- `pseudo_region_enc_output = (bs, num_clusters, d_model)`
- `pseudo_region_mask_enc = (bs, 1, 1, num_clusters)`

`grid_enc_output` and `pseudo_region_enc_output` are concatenated to give the encoder `output`: `(bs, max_detections, d_model) + (bs, num_clusters, d_model) = (bs, max_detections+num_clusters, d_model)`

`grid_mask_enc` and `pseudo_region_mask_enc` are concatenated to give `mask`: `(bs, 1, 1, max_detections) + (bs, 1, 1, num_clusters) = (bs, 1, 1, max_detections+num_clusters)`
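The SP aggregation described above follows the NetVLAD pattern. It can be sketched for a single image in plain Python. The sketch assumes that `self.conv` is a 1×1 convolution, so it scores every grid location independently. It also uses toy sizes in place of 49 locations, 2048 channels and 5 clusters. `pseudo_regions` and its parameters are hypothetical names.

```python
import math


def l2_normalize(v, eps=1e-12):
    """Divide by the L2 norm, clamped by eps as F.normalize does."""
    norm = max(math.sqrt(sum(a * a for a in v)), eps)
    return [a / norm for a in v]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pseudo_regions(grids, conv_w, conv_b, centroids):
    """NetVLAD-style pooling of L grid descriptors (each of length C) into K regions.

    conv_w / conv_b: K x C weights and K biases of the assumed 1x1 convolution
    centroids:       K x C cluster centres
    Returns a flat list of length K * C, i.e. one row of the (bs, K * C) output.
    """
    grids = [l2_normalize(x) for x in grids]  # normalize_input, per location
    K, C, L = len(centroids), len(grids[0]), len(grids)
    # soft_assign[l][k]: softmax over the K clusters of the 1x1-conv scores at location l
    soft_assign = [softmax([sum(w * a for w, a in zip(conv_w[k], x)) + conv_b[k] for k in range(K)])
                   for x in grids]
    p = []
    for k in range(K):
        # Residuals to centroid k, weighted by soft_assign and summed over the L locations
        v = [sum(soft_assign[l][k] * (grids[l][c] - centroids[k][c]) for l in range(L))
             for c in range(C)]
        p.append(l2_normalize(v))  # intra-normalization
    flat = [a for v in p for a in v]  # view(bs, -1)
    return l2_normalize(flat)  # final L2 normalization


# Toy sizes: 4 grid locations, C = 3 channels, K = 2 clusters
grids = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
conv_w = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
conv_b = [0.0, 0.0]
centroids = [[0.5, 0.0, 0.0], [0.0, 0.5, 0.5]]
p = pseudo_regions(grids, conv_w, conv_b, centroids)
print(len(p))  # 6
```

Intra-normalization gives each of the K blocks unit norm before the final normalization. As a result, every pseudo-region ends up with the same weight (norm $1/\sqrt{K}$), however many grid locations were assigned to it.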

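Decoding the combined features

Next, `seq` (that is, the caption), `output` and `mask` are fed to the decoder by calling `TransformerDecoderLayer.forward()`. `input` has shape `(bs, seq_len)`, `encoder_output` has shape `(bs, max_detections+num_clusters, d_model)`, and `mask_encoder` has shape `(bs, 1, 1, max_detections+num_clusters)`.

- `mask_queries` marks the non-padding positions of `seq`: 1 where the token is not `<pad>` and 0 where it is. Its shape is `(bs, seq_len, 1)`.
- `mask_self_attention` starts as a `(seq_len, seq_len)` upper-triangular matrix: with `diagonal=1`, the entries strictly above the main diagonal are 1 and all other entries are 0. Two `unsqueeze(0)` calls turn `(seq_len, seq_len)` into `(1, 1, seq_len, seq_len)`.
- A `(bs, 1, 1, seq_len)` tensor is then added to `mask_self_attention`. Each of its rows looks like `[0, 0, ..., 1, 1, ..., 1]`, where 1 marks padding: `(1, 1, seq_len, seq_len) + (bs, 1, 1, seq_len) = (bs, 1, seq_len, seq_len)`.
- The result is a 4-D tensor with values 0, 1 and 2. 0 means the key token is visible (already generated and not padding). 1 means it is masked for one reason, either because it is a future token or because it is padding. 2 means it is both a future token and padding.
- `.gt(0)` is applied to the new `mask_self_attention`: entries strictly greater than 0 become `True` (masked), and all others `False`.

Next comes the check `if self._is_stateful`. Judging from the code, this is only enabled during step-by-step decoding such as beam search. When `TransformerDecoderLayer` is defined, it registers two state variables. They are stored on the module (like buffers) and are not updated by gradients. Their purpose is to avoid recomputing the masks and positions of earlier steps when decoding one token at a time:

```python
self.register_state('running_mask_self_attention', torch.zeros((1, 1, 0)).byte())
self.register_state('running_seq', torch.zeros((1,)).long())
```

If `self._is_stateful=True`, `running_mask_self_attention` is read from this state and concatenated with the current `mask_self_attention` along the last dimension (`dim=-1`), giving the new `mask_self_attention`.

`seq` is a tensor holding the positions 1 to `seq_len` (`torch.arange(1, seq_len + 1)`), expanded `bs` times to shape `(bs, seq_len)`. Wherever `mask_queries` is $0$, the same position of `seq` is also set to $0$. In stateful mode, `seq` is instead the step counter `running_seq`, which is incremented by 1 at every step.

Finally, `input` goes through the `word_emb` embedding layer and `seq` through the `pos_emb` positional embedding layer. Both are projected to `d_model` and then added: `input` and `seq` both go from `(bs, seq_len)` to `(bs, seq_len, d_model)`, and `out = word_emb(input) + pos_emb(seq)`.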
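The mask construction can be reproduced for one toy sequence in plain Python. The token ids are hypothetical, with `<pad>`=1, and `decoder_masks` is a hypothetical helper. It returns the summed mask before `.gt(0)`, so the 0/1/2 values are visible. The last column is 2 in the first three rows because that key is both a future token and padding. The last row holds a 1 only because its key is padding.

```python
PAD = 1  # hypothetical index of <pad>, consistent with the vocabulary above


def decoder_masks(tokens, pad=PAD):
    """Rebuild the masks of TransformerDecoderLayer.forward for one sequence."""
    n = len(tokens)
    # mask_queries: 1 for real tokens, 0 for padding
    mask_queries = [1 if t != pad else 0 for t in tokens]
    # torch.triu(ones(n, n), diagonal=1): 1 strictly above the main diagonal (future keys)
    causal = [[1 if j > i else 0 for j in range(n)] for i in range(n)]
    # Padding row (1 where the key is <pad>), broadcast over every query row
    pad_row = [1 if t == pad else 0 for t in tokens]
    summed = [[causal[i][j] + pad_row[j] for j in range(n)] for i in range(n)]
    masked = [[v > 0 for v in row] for row in summed]  # .gt(0): True = masked
    # Positions 1..seq_len, set to 0 where the token is padding
    seq = [(i + 1) if mask_queries[i] else 0 for i in range(n)]
    return mask_queries, summed, masked, seq


tokens = [2, 7, 3, PAD]  # <bos>, a word, <eos>, <pad> (hypothetical ids)
mask_queries, summed, masked, seq = decoder_masks(tokens)
for row in summed:
    print(row)
# [0, 1, 1, 2]
# [0, 0, 1, 2]
# [0, 0, 0, 2]
# [0, 0, 0, 1]
print(seq)  # [1, 2, 3, 0]
```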

```python
def forward(self, input, encoder_output, mask_encoder):
    # input (bs, seq_len)
    b_s, seq_len = input.shape[:2]
    # (b_s, seq_len, 1)
    mask_queries = (input != self.padding_idx).unsqueeze(-1).float()
    mask_self_attention = torch.triu(torch.ones((seq_len, seq_len), dtype=torch.uint8, device=input.device),
                                     diagonal=1)
    # (1, 1, seq_len, seq_len)
    mask_self_attention = mask_self_attention.unsqueeze(0).unsqueeze(0)
    mask_self_attention = mask_self_attention + (input == self.padding_idx).unsqueeze(1).unsqueeze(1).byte()
    # (b_s, 1, seq_len, seq_len)
    mask_self_attention = mask_self_attention.gt(0)
    if self._is_stateful:
        self.running_mask_self_attention = torch.cat(
            [self.running_mask_self_attention.type_as(mask_self_attention), mask_self_attention], -1)
        mask_self_attention = self.running_mask_self_attention
    # (b_s, seq_len)
    seq = torch.arange(1, seq_len + 1).view(1, -1).expand(b_s, -1).to(input.device)
    seq = seq.masked_fill(mask_queries.squeeze(-1) == 0, 0)
    if self._is_stateful:
        self.running_seq.add_(1)
        seq = self.running_seq
    # embedding layers
    out = self.word_emb(input) + self.pos_emb(seq)
    for i, l in enumerate(self.layers):
        out = l(out, encoder_output, mask_queries, mask_self_attention, mask_encoder)
    # (bs, seq_len, d_model) @ (d_model, vocab_size) = (bs, seq_len, vocab_size)
    out = self.fc(out)
    return F.log_softmax(out, dim=-1)
```

`out`, `encoder_output`, `mask_queries` and the other inputs are passed through the decoder layers. There are $3$ of them, and each has the same input and output shape. This enters `DecoderLayer.forward()`, which again implements the Transformer decoder much as in the original paper. Note that the `can_be_stateful` argument differs between `self_att` and `enc_att`:

```python
self_att = MultiHeadAttention(..., can_be_stateful=True, ...)
enc_att = MultiHeadAttention(..., can_be_stateful=False, ...)
```

The former is `True` and the latter `False`. This argument controls whether the keys and values are stored across decoding steps.

```python
def forward(self, input, enc_output, mask_pad, mask_self_att, mask_enc_att):
    # MHA + AddNorm
    self_att = self.self_att(input, input, input, mask_self_att)
    self_att = self.lnorm1(input + self.dropout1(self_att))
    # (bs, seq_len, d_model) * (bs, seq_len, 1) = (bs, seq_len, d_model)
    self_att = self_att * mask_pad
    # MHA + AddNorm: image
    enc_att = self.enc_att(self_att, enc_output, enc_output, mask_enc_att)
    enc_att = self.lnorm2(self_att + self.dropout2(enc_att))
    enc_att = enc_att * mask_pad
    ff = self.pwff(enc_att)
    ff = ff * mask_pad
    return ff
```

`input`, `mask_self_att` and the other inputs go into the `self_att` layer, which enters `MultiHeadAttention.forward()`:

```python
def forward(self, queries, keys, values, attention_mask=None, attention_weights=None):
    if self.can_be_stateful and self._is_stateful:
        self.running_keys = torch.cat([self.running_keys, keys], 1)
        keys = self.running_keys
        self.running_values = torch.cat([self.running_values, values], 1)
        values = self.running_values
    if self.identity_map_reordering:
        q_norm = self.layer_norm(queries)
        k_norm = self.layer_norm(keys)
        v_norm = self.layer_norm(values)
        out = self.attention(q_norm, k_norm, v_norm, attention_mask, attention_weights)
        out = queries + self.dropout(torch.relu(out))
    else:
        out = self.attention(queries, keys, values, attention_mask, attention_weights)
        out = self.dropout(out)
        out = self.layer_norm(queries + out)
    return out
```

When `MultiHeadAttention` is constructed, it checks `can_be_stateful`. If it is `True`, `running_keys` and `running_values` are registered as state, with shape `(0, d_model)`:

```python
self.can_be_stateful = can_be_stateful
if self.can_be_stateful:
    self.register_state('running_keys', torch.zeros((0, d_model)))
    self.register_state('running_values', torch.zeros((0, d_model)))
```

If `self.identity_map_reordering=True`, layer normalization is applied before attention instead of after the residual connection. This is the distinction between pre-LN and post-LN.

Finally, the decoder's output is returned. `train_xe()` computes the loss against `captions_gt`, back-propagates, and updates the parameters.
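The stateful mode can be illustrated with a toy single-head self-attention in plain Python. As a simplification, the toy leaves out the learned projections and multiple heads of the real `MultiHeadAttention`. It feeds one token per step while appending to `running_keys` / `running_values`, and this gives the same outputs as causal attention over the whole sequence at once. That equivalence is what lets step-by-step decoding reuse earlier steps. The encoder-attention block does not need this state, presumably because its keys and values (the encoder output) do not change between steps. All names are hypothetical.

```python
import math


def attend(q, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(wi * v[c] for wi, v in zip(w, values)) / z for c in range(len(values[0]))]


def full_causal(xs):
    """Non-stateful: position t attends to positions 0..t (causal mask)."""
    return [attend(xs[t], xs[:t + 1], xs[:t + 1]) for t in range(len(xs))]


class StatefulSelfAttention:
    """Stateful mode: keep running keys/values and process one token per step."""

    def __init__(self):
        self.running_keys = []  # plays the role of the empty (0, d_model) initial state
        self.running_values = []

    def step(self, x):
        self.running_keys.append(x)  # like torch.cat([running_keys, keys], 1)
        self.running_values.append(x)
        return attend(x, self.running_keys, self.running_values)


xs = [[1.0, 0.0], [0.5, 0.5], [0.0, 2.0]]
cache = StatefulSelfAttention()
incremental = [cache.step(x) for x in xs]
full = full_causal(xs)
print(incremental == full)  # True
```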

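SCST training stage

The SCST stage trains with the policy-gradient method of self-critical reinforcement learning. The `dataloader` here is the `dict_dataloader_train` defined earlier. Iterating over it yields `it`, `detections`, `caps_gt` and `captions`:

- `detections`: Tensor, `(bs, max_detections, visual_dim)`
- `caps_gt`: list of length `bs`, containing `bs` lists. Each inner list holds the $5$ reference captions (`str`) of one image
- `captions`: Tensor, `(bs, seq_len)`, the token indices of the captions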
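The reward and baseline arithmetic inside `train_scst` can be sketched in plain Python with made-up numbers. `scst_loss` is a hypothetical helper. `log_probs` stands for the per-caption mean log-probability (`torch.mean(log_probs, -1)` in the code), and the rewards stand for CIDEr scores.

```python
import itertools


def scst_loss(log_probs, rewards):
    """Self-critical policy-gradient loss with the mean reward of the beams as baseline.

    log_probs: bs x beam_size, mean token log-probability of each sampled caption
    rewards:   bs x beam_size, CIDEr score of each sampled caption
    """
    losses = []
    for lp_row, r_row in zip(log_probs, rewards):
        baseline = sum(r_row) / len(r_row)  # reward_baseline for this image
        for lp, r in zip(lp_row, r_row):
            losses.append(-lp * (r - baseline))  # -log p * advantage
    return sum(losses) / len(losses)  # loss.mean()


beam_size = 2
caps_gt = [["a dog runs", "a brown dog"], ["a cat sleeps"]]  # references per image
# Repeat each image's reference list once per beam, as train_scst does
caps_gt_rep = list(itertools.chain(*([c, ] * beam_size for c in caps_gt)))

log_probs = [[-1.0, -2.0], [-0.5, -0.5]]
rewards = [[1.2, 0.4], [0.9, 0.9]]
loss = scst_loss(log_probs, rewards)
print(len(caps_gt_rep), loss)  # 4 captions to score; loss is about -0.1
```

Each beam is compared with the mean reward of the beams for the same image. Beams above the mean get a negative loss term, so minimizing the loss raises their log-probability. Beams below the mean get a positive term, and beams equal to the mean contribute nothing. The second image therefore adds 0 here.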

```python
import itertools
import multiprocessing

import numpy as np
import torch
from tqdm import tqdm


def train_scst(model, dataloader, optim, cider, text_field, scheduler_rl, e):
    # Training with self-critical
    tokenizer_pool = multiprocessing.Pool()
    running_reward = .0
    running_reward_baseline = .0
    model.train()
    scheduler_rl.step()
    print('lr = ', optim.state_dict()['param_groups'][0]['lr'])
    running_loss = .0
    seq_len = 20
    beam_size = 5
    # kwargs = {
    #     'text_flag': args.text2text
    # }
    with tqdm(desc='Epoch %d - train' % e, unit='it', total=len(dataloader)) as pbar:
        for it, (detections, caps_gt, captions) in enumerate(dataloader):
            detections = detections.to(device)
            # text = captions.to(device)
            # kwargs['text'] = text
            outs, log_probs = model(mode='rl', images=detections, max_len=seq_len,
                                    eos_idx=text_field.vocab.stoi['<eos>'], beam_size=beam_size,
                                    out_size=beam_size)
            optim.zero_grad()
            # Rewards
            caps_gen = text_field.decode(outs.view(-1, seq_len))
            caps_gt = list(itertools.chain(*([c, ] * beam_size for c in caps_gt)))
            caps_gen, caps_gt = tokenizer_pool.map(evaluation.PTBTokenizer.tokenize, [caps_gen, caps_gt])
            reward = cider.compute_score(caps_gt, caps_gen)[1].astype(np.float32)
            reward = torch.from_numpy(reward).to(device).view(detections.shape[0], beam_size)
            reward_baseline = torch.mean(reward, -1, keepdim=True)
            loss = -torch.mean(log_probs, -1) * (reward - reward_baseline)
            loss = loss.mean()
            loss.backward()
            optim.step()
            running_loss += loss.item()
            running_reward += reward.mean().item()
            running_reward_baseline += reward_baseline.mean().item()
            pbar.set_postfix(loss=running_loss / (it + 1), reward=running_reward / (it + 1),
                             reward_baseline=running_reward_baseline / (it + 1))
            pbar.update()
    loss = running_loss / len(dataloader)
    reward = running_reward / len(dataloader)
    reward_baseline = running_reward_baseline / len(dataloader)
    return loss, reward, reward_baseline
```

`mode`, `detections`, `max_len`, `beam_size` and the other arguments are passed to `model()`, which enters `Transformer.forward()`. In `rl` mode it creates a `BeamSearch` object and calls its `apply` method:

```python
elif mode == 'rl':
    bs = BeamSearch(self, max_len, eos_idx, beam_size)
    return bs.apply(images, out_size, return_probs)
```

`model()` returns `outs` and `log_probs`, where `outs` holds the token indices. The indices are decoded into sentences and scored against `caps_gt` with CIDEr to compute `reward` and `reward_baseline`. The loss is then computed and back-propagated to update the parameters.

After each epoch, the validation loss and the evaluation metrics are computed:

```python
# Validation loss
val_loss = evaluate_loss(model, dataloader_val, loss, text_field, e)
writer.add_scalar('data/val_loss', val_loss, e)

# Validation scores
scores = evaluate_metrics(model, dict_dataloader_val, text_field, e)
val_cider = scores['CIDEr']
print("Validation scores", scores)
writer.add_scalar('data/val_cider', val_cider, e)
writer.add_scalar('data/val_bleu1', scores['BLEU'][0], e)
writer.add_scalar('data/val_bleu4', scores['BLEU'][3], e)
writer.add_scalar('data/val_meteor', scores['METEOR'], e)
writer.add_scalar('data/val_rouge', scores['ROUGE'], e)

# Test scores
scores = evaluate_metrics(model, dict_dataloader_test, text_field, e)
test_cider = scores['CIDEr']
print("Test scores", scores)
writer.add_scalar('data/test_cider', test_cider, e)
writer.add_scalar('data/test_bleu1', scores['BLEU'][0], e)
writer.add_scalar('data/test_bleu4', scores['BLEU'][3], e)
writer.add_scalar('data/test_meteor', scores['METEOR'], e)
writer.add_scalar('data/test_rouge', scores['ROUGE'], e)

# Prepare for next epoch
best = False
if val_cider >= best_cider:
    best_cider = val_cider
    patience = 0
    best = True
else:
    patience += 1

best_test = False
if test_cider >= best_test_cider:
    best_test_cider = test_cider
    best_test = True

switch_to_rl = False
exit_train = False

if patience == 5:
    # The XE stage trains for at least 15 epochs
    if e < args.xe_least:
        print('special treatment, e = {}'.format(e))
        use_rl = False
        switch_to_rl = False
        patience = 0
    elif not use_rl:
        use_rl = True
        switch_to_rl = True
        patience = 0
        optim_rl = Adam(model.parameters(), lr=1, betas=(0.9, 0.98))
        scheduler_rl = LambdaLR(optim_rl, lambda_lr_rl)
        for k in range(e - 1):
            scheduler_rl.step()
        print("Switching to RL")
    else:
        print('patience reached.')
        exit_train = True

if e == args.xe_most:
    # The XE stage trains for no more than 20 epochs
    if not use_rl:
        use_rl = True
        switch_to_rl = True
        patience = 0
        optim_rl = Adam(model.parameters(), lr=1, betas=(0.9, 0.98))
        scheduler_rl = LambdaLR(optim_rl, lambda_lr_rl)
        for k in range(e - 1):
            scheduler_rl.step()
        print("Switching to RL")

if switch_to_rl and not best:
    data = torch.load(os.path.join(args.dir_to_save_model, '%s_best.pth' % args.exp_name))
    torch.set_rng_state(data['torch_rng_state'])
    torch.cuda.set_rng_state(data['cuda_rng_state'])
    np.random.set_state(data['numpy_rng_state'])
    random.setstate(data['random_rng_state'])
    model.load_state_dict(data['state_dict'])
    print('Resuming from epoch %d, validation loss %f, best_cider %f, and best test_cider %f' % (
        data['epoch'], data['val_loss'], data['best_cider'], data['best_test_cider']))

torch.save({
    'torch_rng_state': torch.get_rng_state(),
    'cuda_rng_state': torch.cuda.get_rng_state(),
    'numpy_rng_state': np.random.get_state(),
    'random_rng_state': random.getstate(),
    'epoch': e,
    'val_loss': val_loss,
    'val_cider': val_cider,
    'state_dict': model.state_dict(),
    'optimizer': optim.state_dict() if not use_rl else optim_rl.state_dict(),
    'scheduler': scheduler.state_dict() if not use_rl else scheduler_rl.state_dict(),
    'patience': patience,
    'best_cider': best_cider,
    'best_test_cider': best_test_cider,
    'use_rl': use_rl,
}, os.path.join(args.dir_to_save_model, '%s_last.pth' % args.exp_name))

if best:
    copyfile(os.path.join(args.dir_to_save_model, '%s_last.pth' % args.exp_name),
             os.path.join(args.dir_to_save_model, '%s_best.pth' % args.exp_name))
if best_test:
    copyfile(os.path.join(args.dir_to_save_model, '%s_last.pth' % args.exp_name),
             os.path.join(args.dir_to_save_model, '%s_best_test.pth' % args.exp_name))

if exit_train:
    writer.close()
    break
```
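The epoch-level control flow above (patience, `xe_least`, `xe_most`) can be traced with a small plain-Python simulation. `plan_stages` is a hypothetical helper that replays only the stage-switching rules on a list of validation CIDEr scores. It leaves out checkpoint reloading, test scores and the scheduler fast-forward.

```python
def plan_stages(val_ciders, xe_least=15, xe_most=20, max_patience=5):
    """Replay the stage-switching rules on validation CIDEr scores (one per epoch,
    starting at epoch 0). Returns (epoch, stage) pairs up to where training exits."""
    use_rl, best_cider, patience = False, 0.0, 0
    history = []
    for e, val_cider in enumerate(val_ciders):
        history.append((e, 'scst' if use_rl else 'xe'))
        if val_cider >= best_cider:  # ties also count as an improvement
            best_cider, patience = val_cider, 0
        else:
            patience += 1
        exit_train = False
        if patience == max_patience:
            if e < xe_least:  # XE runs for at least xe_least epochs: reset and continue
                use_rl, patience = False, 0
            elif not use_rl:  # XE has plateaued: switch to SCST
                use_rl, patience = True, 0
            else:  # SCST has plateaued: stop training
                exit_train = True
        if e == xe_most and not use_rl:  # XE runs for at most xe_most epochs
            use_rl, patience = True, 0
        if exit_train:
            break
    return history


# Steadily improving scores: XE is cut off at epoch xe_most = 20
steady = plan_stages([float(i) for i in range(26)])
# A plateau after epoch 2: resets at epochs 7 and 12, switch after 17, exit at 22
plateau = plan_stages([1.0, 2.0, 3.0] + [2.5] * 30)
print(steady[20], steady[21])  # (20, 'xe') (21, 'scst')
print(plateau[17], plateau[18], plateau[-1])  # (17, 'xe') (18, 'scst') (22, 'scst')
```

With these rules, a patience of 5 reached before epoch 15 only resets the counter, and the first plateau after that switches to SCST. A second plateau during SCST ends training. Because the comparison is `>=`, an epoch that only ties the best score also resets patience.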

Last edited: 2022-10-09 09:28